
1. Structure beats content volume: LLMs extract snippets through RAG systems rather than reading full articles. Your content structure determines whether AI finds and cites you, regardless of your Google ranking.

2. Five critical technical tasks: Set granular crawler access (robots.txt), use server-side rendering instead of client-side JavaScript rendering, create an llms.txt file, structure content for chunking (one idea per header), and implement schema markup (JSON-LD).

3. Answer-first HTML hierarchy: The first 40-60 words after each header must directly answer the question. Use simple declarative sentences, and present comparisons in data tables instead of paragraphs.

4. Information gain wins citations: Original data, proprietary research, and expert quotes signal new information to LLMs. Press releases with original data get cited more than those without.

5. AI referrals convert 34% better: In our case study, prospects who arrived through AI recommendations converted 34% more often than those from traditional search, because the AI's recommendation process had already pre-qualified them.

You rank first on Google for 'project management software for distributed teams.'

Your product is better than the competition.

Still, when people ask ChatGPT for a project management tool, it suggests your competitors, such as Asana, Monday.com, and ClickUp, and leaves you out.

The issue is not your content quality but the way Large Language Models (LLMs) and their retrieval systems pull information from your site.

LLM-powered search tools use Retrieval-Augmented Generation (RAG) systems to break documents into segments, score each segment for relevance, and keep only the passages they can confidently cite.

If your content structure does not match what LLMs look for, your site will not show up in their results, no matter how much you have optimized for traditional search.

This article is the practical, hands-on part of our Generative Engine Optimization (GEO) series. While the main page explains why GEO matters, here you will find specific ways to optimize your website so LLMs can find and cite it.

Traditional search engines prefer long-form, in-depth content. AI platforms, on the other hand, look for facts and use them to build their answers. This difference is now the key reason why your top-ranking content might not get cited.

Did you know?

Google's original algorithm used 17 different ranking factors.

These included the number of backlinks, how many relevant keywords appeared, user engagement, and the authority of the page or domain. The resulting ranking score decided whether users would click on your content.

Now, with web pages treated more like products, almost 58% of Google searches return an AI-generated summary instead of sending users to your site.

If someone asks an AI for the best CRM for a small law firm, the AI turns the question into a vector, scans millions of text snippets, and picks the most relevant ones.

It then combines these into a short answer.
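To make the retrieval step concrete, here is a toy sketch in Python. It simplifies heavily. Real systems use learned embedding models, whereas this version uses plain word counts as the "vector" and cosine similarity to rank snippets. The `retrieve` helper, the product name "Acme CRM", and the sample snippets are all hypothetical.

```python
import math
import re
from collections import Counter


def to_vector(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (real systems use learned embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, snippets: list[str], k: int = 2) -> list[str]:
    """Hypothetical helper: return the k snippets most similar to the query, best first."""
    q = to_vector(query)
    ranked = sorted(snippets, key=lambda s: cosine(q, to_vector(s)), reverse=True)
    return ranked[:k]


# Hypothetical page snippets: one fluffy intro, one direct answer, one pricing line.
snippets = [
    "Our platform has evolved alongside a changing digital landscape.",
    "Acme CRM is a CRM for small law firms with built-in conflict checks.",
    "Pricing starts with a free plan for teams of up to three users.",
]
print(retrieve("best CRM for a small law firm", snippets, k=1))
# prints ['Acme CRM is a CRM for small law firms with built-in conflict checks.']
```

The direct, answer-first snippet wins. The "evolving landscape" intro shares almost no terms with the question, so it scores lowest.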

Note: AI pulls together pieces from your content and your competitors' content without the user ever visiting your site!

The problem is that SEO-optimized content often includes too much fluff and repeats keywords throughout the document. For example, many sites spend several paragraphs talking about the 'evolving landscape in XYZ domain' before explaining what their service actually does.

This might work for human readers who scroll through your site.

But when a large language model scans those same paragraphs, it may find low-value information, unclear definitions, or no direct answer to the user's question.

The result? AI will skip your content and you will miss out on visibility!

This matters more than ever now that 74% of sales representatives use AI to research products.

That is precisely why even top-ranked sites on Google often get no citations from AI.

Large Language Models (LLMs) process information differently from people. They do not skim-read, get tired, or notice aesthetics.

LLMs retrieve information based on structure, so your content must be organized for machine indexing. This optimization protocol provides a clear process for updating your content.

Goal: Ensure AI crawlers can easily find, read, and understand your data.

This foundational phase removes the technical barriers that keep AI crawlers from reaching your content. An LLM cannot read or cite your information unless it can locate it efficiently. It is the online equivalent of giving your 'store' a front door.

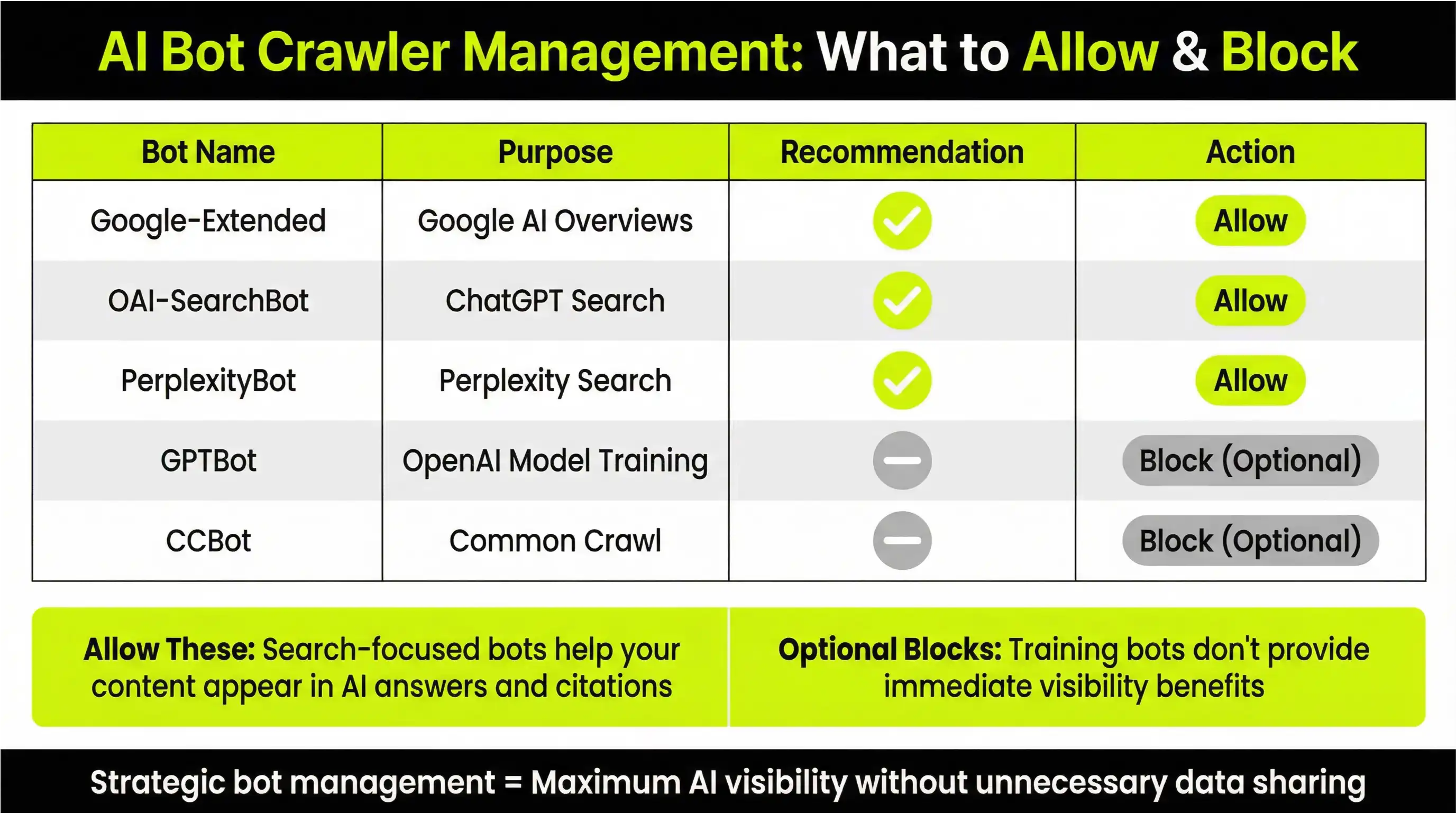

Bots are not all built the same.

It is easy to distinguish bots that collect data to train models from bots that fetch pages to answer live search queries.

A recent analysis of nearly 180 million citation records showed how differently platforms behave when crawler access is limited, which underscores the need for careful bot management.

Implementation

Edit your robots.txt file to grant selective access. Allow the retrieval bots that fetch pages for live AI answers, and block training bots if protecting your proprietary content matters to your business.

This way, your content can appear in AI answers soon after it goes live, while you keep proprietary information away from competitors' models. Keep in mind that robots.txt is advisory: it only stops crawlers that choose to honor it.
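Below is a minimal sketch of such a selective robots.txt, checked with Python's standard-library `urllib.robotparser`. The user-agent tokens are assumptions based on vendor documentation at the time of writing. For example, OpenAI documents `GPTBot` for training and `OAI-SearchBot` for search. Verify the current names with each AI provider before deploying. The `/private/` path and `example.com` URLs are illustrative.

```python
from urllib.robotparser import RobotFileParser

# Assumed bot tokens; confirm current names in each vendor's crawler docs.
# Note: a blank line must not separate a User-agent line from its rules.
ROBOTS_TXT = """\
# Training crawlers: block everything
User-agent: GPTBot
User-agent: CCBot
User-agent: Google-Extended
Disallow: /

# Retrieval/search crawlers: allow public content
User-agent: OAI-SearchBot
User-agent: ChatGPT-User
User-agent: PerplexityBot
Disallow: /private/
Allow: /

# Everyone else
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check which bots may fetch a public blog URL under these rules.
for bot in ["GPTBot", "CCBot", "OAI-SearchBot", "PerplexityBot", "Googlebot"]:
    allowed = parser.can_fetch(bot, "https://example.com/blog/geo-guide")
    print(f"{bot:15} blog allowed: {allowed}")
```

Running a check like this before you deploy catches the classic mistake of accidentally blocking the search bots you meant to allow.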

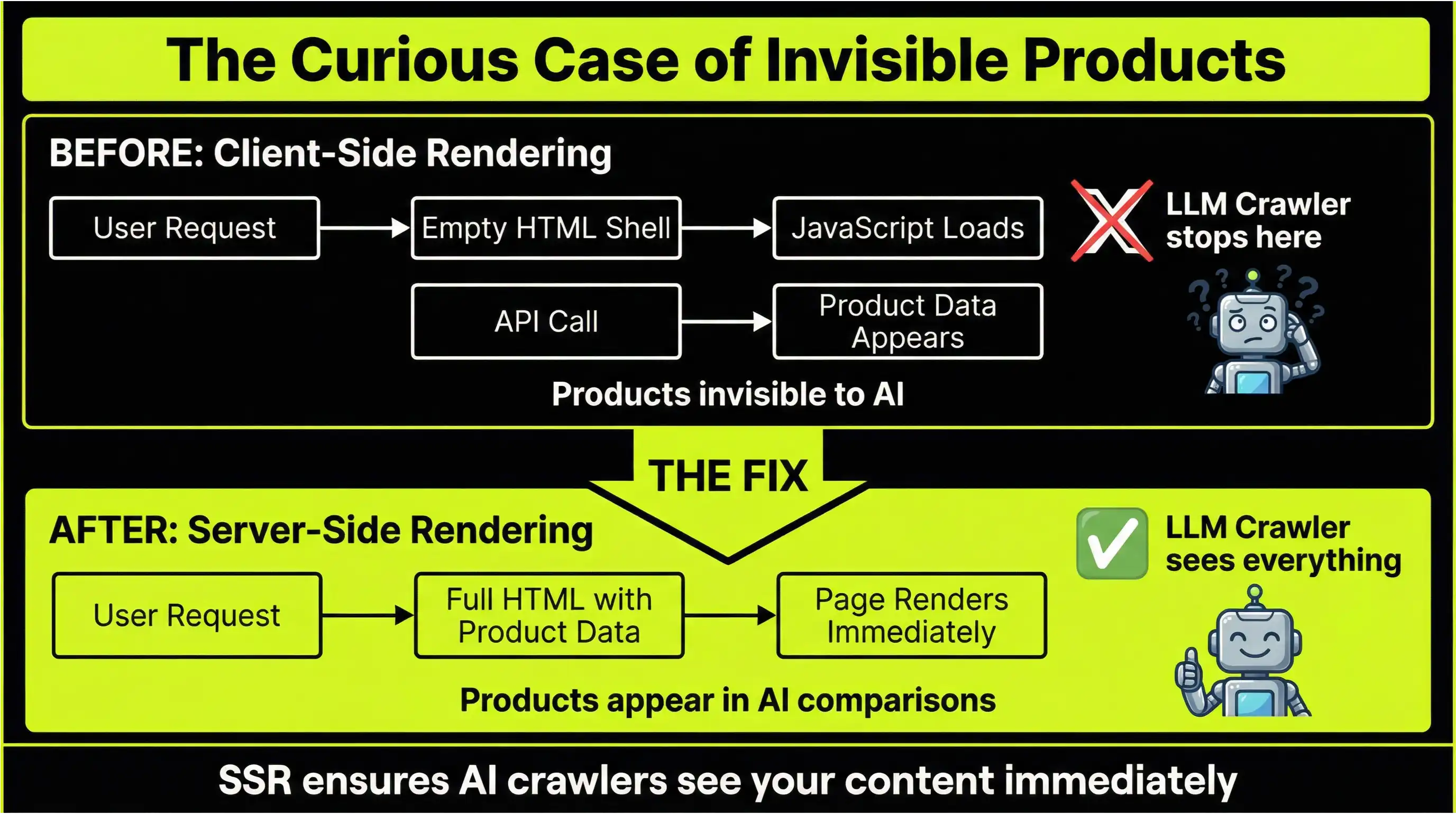

Unlike Googlebot, which renders JavaScript, many AI crawlers do not execute it. If your important data is hidden behind client-side rendering, AI may miss it or fill in the blanks with errors. Make sure all key text, definitions, and data tables are included in the initial static HTML response.
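You can approximate what a non-rendering crawler sees by parsing the raw HTML response without running any JavaScript, then checking that your key facts appear in the visible text. The sketch below does this with Python's standard-library `html.parser`. The `missing_phrases` helper, the product "Trail Runner X", and the sample pages are hypothetical. In practice you would check the raw HTML your server returns (for example, fetched with `curl`) rather than inline strings.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text from raw HTML, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def missing_phrases(raw_html: str, phrases: list[str]) -> list[str]:
    """Hypothetical helper: key phrases that do NOT appear in the unrendered HTML."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.parts).split()).lower()
    return [p for p in phrases if p.lower() not in text]


# Client-side rendered page: the crawler only sees a spinner.
csr_page = '<div id="app"><div class="spinner">Loading...</div></div><script src="/bundle.js"></script>'
# Server-side rendered page: the facts are in the initial HTML.
ssr_page = "<main><h1>Trail Runner X</h1><p>Weight: 240 g. Price: $129.</p></main>"
key_facts = ["Trail Runner X", "Weight: 240 g"]

print(missing_phrases(csr_page, key_facts))
print(missing_phrases(ssr_page, key_facts))
```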

The Curious Case of an Invisible Product

One e-commerce business launched new products, but they did not appear in AI shopping assistants. The site used a popular JavaScript framework, and all product details were loaded from a client-side API after the page loaded.

Users and Googlebot could see everything, but LLM crawlers only saw a loading spinner. The fix was to switch to Server-Side Rendering so the full HTML loaded right away. After making this change, the products started appearing in AI-generated product comparisons within weeks.

An llms.txt file gives LLMs a simple table of contents that points them to your best, most reliable content. This helps AI tools find what matters and saves them time.

Adoption of llms.txt is still new and uneven, but early research from the Developer Marketing Alliance suggests it can improve the accuracy and relevance of AI-generated answers.

Getting started now may also signal to LLMs that your content is maintained and up to date. As more AI platforms roll out their own crawling rules, being among the first to use llms.txt could give you an edge.

Implementation

Create a file called llms.txt and put it at yourdomain.com/llms.txt. Use the outline below to list your most important content, services, and policies.

```markdown
# LLM Instructions for ethicalseo.io

## Core Content

- [What is Generative Engine Optimization (GEO)?](/what-is-generative-engine-optimization-geo)
- [Optimizing Content for LLMs: The Definitive How-To Guide](/optimizing-content-for-llms)

## Services

- [GEO Services](/geo-services)
- [LLM Content Optimization Services](/llm-content-optimization-services)

## About

- [About Us](/about)
```

Crawler directives such as `User-agent`, `Disallow`, `Allow`, and `Sitemap` are not part of the llms.txt format. Put them in robots.txt instead:

```text
User-agent: *
Disallow: /private/
Allow: /blog/
Sitemap: https://ethicalseo.io/sitemap.xml
```
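If your site has many pages, you could generate llms.txt from structured data instead of editing it by hand. This minimal sketch assumes the Markdown layout proposed at llmstxt.org: an H1 title, H2 section headings, and `- [title](url)` link lists. The `build_llms_txt` function and its input format are hypothetical.

```python
def build_llms_txt(title: str, sections: dict[str, list[tuple[str, str]]]) -> str:
    """Hypothetical helper: H1 title, then one H2 per section with a link list."""
    lines = [f"# {title}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        lines.extend(f"- [{label}]({url})" for label, url in links)
        lines.append("")
    # Exactly one trailing newline at the end of the file.
    return "\n".join(lines).rstrip() + "\n"


sections = {
    "Core Content": [
        ("What is Generative Engine Optimization (GEO)?", "/what-is-generative-engine-optimization-geo"),
        ("Optimizing Content for LLMs: The Definitive How-To Guide", "/optimizing-content-for-llms"),
    ],
    "Services": [("GEO Services", "/geo-services")],
    "About": [("About Us", "/about")],
}

print(build_llms_txt("LLM Instructions for ethicalseo.io", sections))
```

Generating the file from the same source as your sitemap keeps the two from drifting apart as pages are added or retired.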

Goal: Create an HTML structure for passage-level indexing and extractability

LLMs read web pages as layers of HTML nodes. If you build clear semantic structures, you help AI break your content into useful pieces, score it, and cite it more easily.

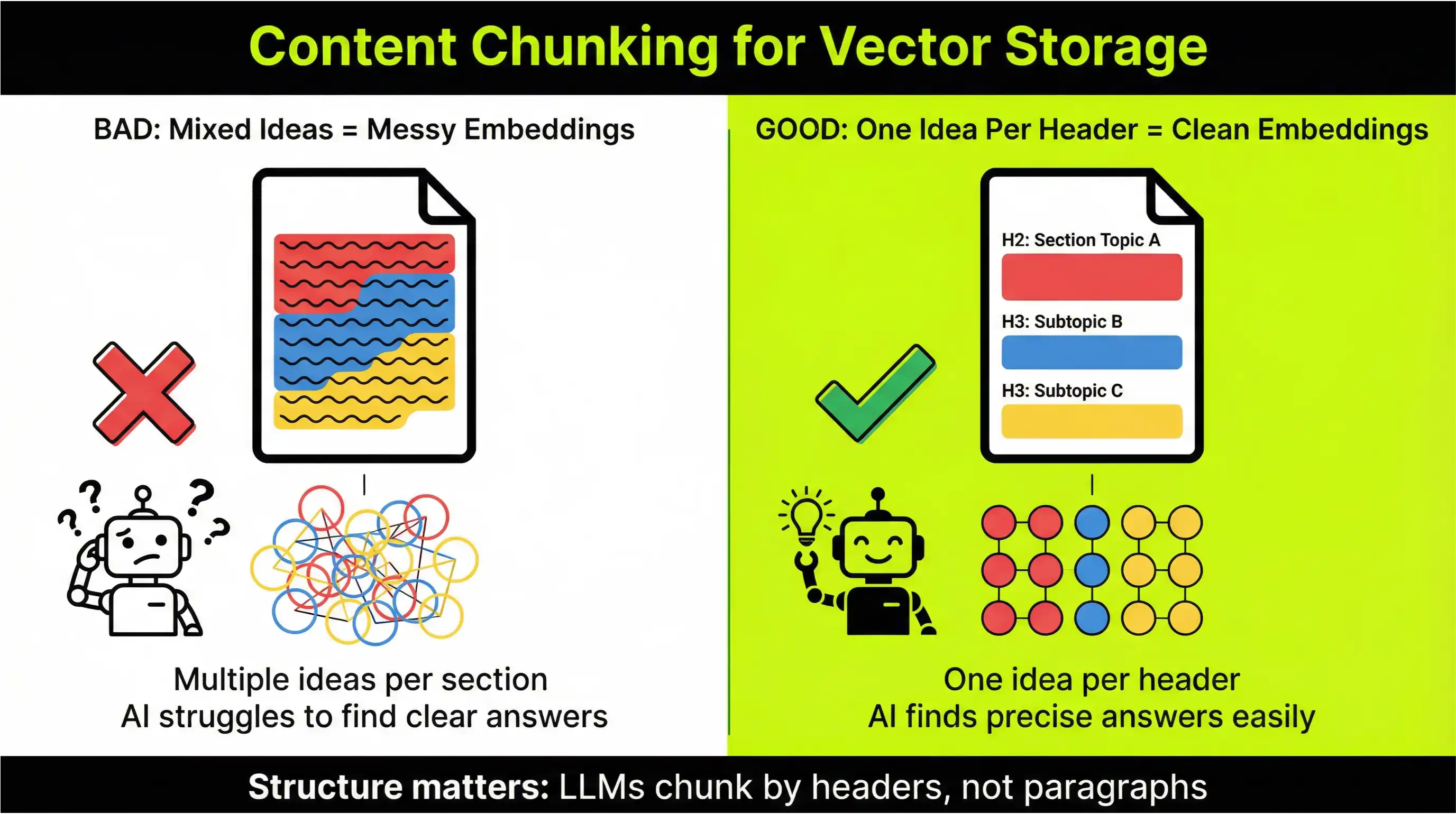

RAG systems split documents into chunks before storing them as vectors.

If a paragraph mixes too many ideas, its embedding blends several topics, and the AI struggles to match it to a clear answer. Research shows that chunking content based on your document's structure works better than just splitting text by length.
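The contrast between structure-aware and length-based chunking can be sketched in a few lines of Python. This is an illustrative simplification, not the behavior of any specific RAG framework. The hypothetical `chunk_by_headers` starts a new chunk at each Markdown-style heading, while the hypothetical `chunk_by_length` cuts every *n* words regardless of meaning.

```python
def chunk_by_length(text: str, n_words: int) -> list[str]:
    """Naive chunking: fixed-size word windows that ignore document structure."""
    words = text.split()
    return [" ".join(words[i:i + n_words]) for i in range(0, len(words), n_words)]


def chunk_by_headers(text: str) -> list[str]:
    """Structure-aware chunking: each chunk is one heading plus the text under it."""
    chunks: list[list[str]] = []
    for line in text.splitlines():
        # A heading (or text before any heading) opens a new chunk.
        if line.startswith("#") or not chunks:
            chunks.append([])
        if line.strip():
            chunks[-1].append(line.strip())
    return [" ".join(c) for c in chunks if c]


doc = """## What is GEO?
GEO gets your content cited in AI-generated answers.
## What is SEO?
SEO ranks your pages in traditional search results."""

print(chunk_by_headers(doc))
print(chunk_by_length(doc, 8))
```

The second length-based chunk mixes the end of the GEO answer with the SEO heading. That is exactly the kind of blended passage that embeds poorly.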

Implementation

LLMs usually pull snippets from the first 40 to 60 words after a header. This is where they look for the most direct, citable answer.

Implementation

```html
<h2>What is the Difference Between GEO and SEO?</h2>
<p>Generative Engine Optimization (GEO) focuses on getting your content cited and mentioned in AI-generated answers, while Search Engine Optimization (SEO) focuses on ranking in traditional search results to drive traffic.</p>
<h3>Core Objectives</h3>
<ul>
  <li>GEO: Influence and appear in AI-generated summaries.</li>
  <li>SEO: Achieve top rankings on search engine results pages.</li>
</ul>
```
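You can audit the answer-first rule with a small script. This Python sketch, built on the standard-library `html.parser`, groups the text under each heading and returns the first 60 words, the window the guideline above says LLMs tend to pull from. The `SectionCollector` class and `answer_windows` helper are hypothetical, and the script treats all text up to the next heading as that heading's section.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4"}


class SectionCollector(HTMLParser):
    """Hypothetical helper: groups body text under the most recent <h1>-<h4>."""

    def __init__(self) -> None:
        super().__init__()
        self.sections: list[tuple[list[str], list[str]]] = []  # (heading words, body words)
        self._in_heading = False

    def handle_starttag(self, tag, attrs):
        if tag in HEADINGS:
            self._in_heading = True
            self.sections.append(([], []))

    def handle_endtag(self, tag):
        if tag in HEADINGS:
            self._in_heading = False

    def handle_data(self, data):
        if not self.sections:
            return  # ignore text that appears before the first heading
        heading_words, body_words = self.sections[-1]
        (heading_words if self._in_heading else body_words).extend(data.split())


def answer_windows(html: str, max_words: int = 60) -> list[tuple[str, str]]:
    """Return (heading, first `max_words` words after it) for every heading."""
    collector = SectionCollector()
    collector.feed(html)
    collector.close()
    return [(" ".join(h), " ".join(b[:max_words])) for h, b in collector.sections]


page = """<h2>What is the Difference Between GEO and SEO?</h2>
<p>Generative Engine Optimization (GEO) focuses on getting your content cited and mentioned in AI-generated answers, while Search Engine Optimization (SEO) focuses on ranking in traditional search results to drive traffic.</p>
<h3>Core Objectives</h3>
<ul><li>GEO: Influence and appear in AI-generated summaries.</li></ul>"""

for heading, window in answer_windows(page):
    print(f"{heading}: {len(window.split())} words -> {window[:50]}...")
```

Reading each window on its own is a quick way to check whether it answers the heading without the surrounding page.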

Large language models can parse HTML tables (`<table>`, `<th>`, `<td>`) to compare features, prices, or specs. This makes it more likely that your data will appear when someone asks for a comparison, such as "I want to compare the price of HubSpot and Salesforce."

A B2B software review site switched from writing out comparisons in text to using HTML tables. After making this change, they saw a 40% jump in AI-generated citations for "X vs. Y" searches, since the tables were easier for models to read.
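Below is a minimal Python sketch that renders comparison data as a semantic HTML table. Column headers go in `<thead>`, and each row label is marked up as `<th scope="row">`. The `comparison_table` helper is hypothetical, and the products and values are placeholders, not real pricing data.

```python
from html import escape


def comparison_table(caption: str, columns: list[str], rows: dict[str, list[str]]) -> str:
    """Hypothetical helper: render a comparison table; every cell is HTML-escaped."""
    head = "".join(f'<th scope="col">{escape(c)}</th>' for c in ["Feature", *columns])
    body = "".join(
        f'<tr><th scope="row">{escape(label)}</th>'
        + "".join(f"<td>{escape(v)}</td>" for v in values)
        + "</tr>"
        for label, values in rows.items()
    )
    return (
        f"<table><caption>{escape(caption)}</caption>"
        f"<thead><tr>{head}</tr></thead><tbody>{body}</tbody></table>"
    )


# Placeholder products and values for illustration only.
print(comparison_table(
    "Tool A vs. Tool B",
    ["Tool A", "Tool B"],
    {"Starting price": ["$X per user/month", "$Y per user/month"], "Free plan": ["Yes", "No"]},
))
```

Each row pairs one labeled attribute with one value per product, so a model can lift a single row as a self-contained fact.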

Goal: Use the "Language of Entities" to make your content clearer and more consistent.

Schema markup does not directly help you rank higher, but it removes ambiguity for LLMs by explicitly describing your content. It may not change whether your content is found, but it affects how it is understood once it is retrieved.

Over the next few years, LLMs are likely to rely increasingly on structured data, such as JSON-LD, to answer questions and make decisions.

Schemas Needed

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "name": "Ethical SEO",
  "url": "https://ethicalseo.io",
  "logo": "https://ethicalseo.io/logo.png",
  "sameAs": [
    "https://www.linkedin.com/company/ethical-seo",
    "https://twitter.com/ethicalseo"
  ]
}
</script>
```

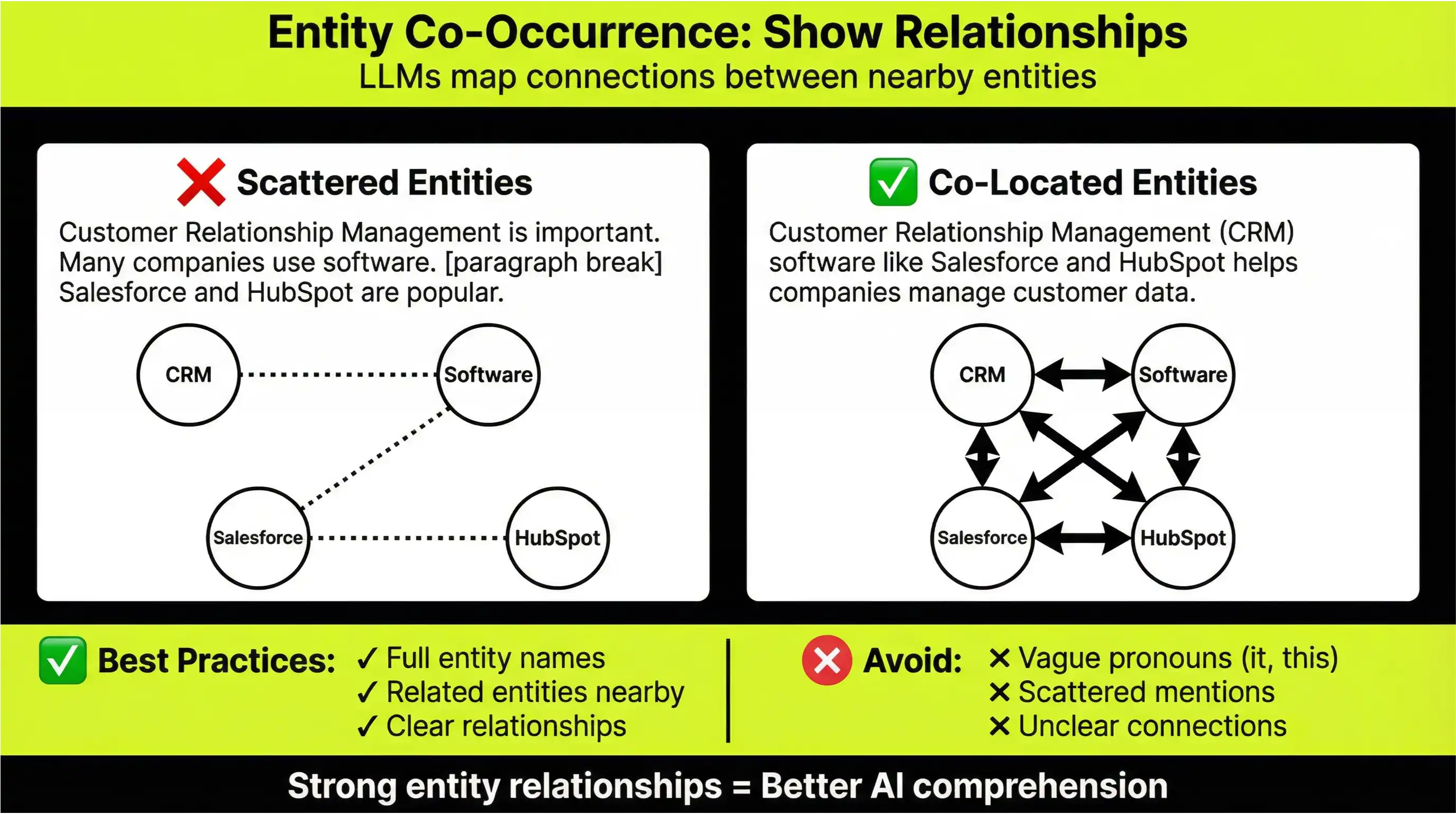

LLMs make sense of text by connecting entities like people, places, and ideas. Your job is to show those links clearly.
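One common way to make entity links explicit is to use schema.org properties such as `about` and `sameAs` in your JSON-LD, pointing each entity to a well-known reference page. The Python sketch below assembles such an `Article` object. The `article_jsonld` helper and the author name are hypothetical, and the choice of Wikipedia pages as references is an assumption.

```python
import json


def article_jsonld(headline: str, author: str, entities: dict[str, str]) -> str:
    """Hypothetical helper: Article JSON-LD whose `about` list links entities to reference URLs."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "about": [
            {"@type": "Thing", "name": name, "sameAs": url}
            for name, url in entities.items()
        ],
    }
    # Wrap in the script tag that pages use to embed JSON-LD.
    return f'<script type="application/ld+json">\n{json.dumps(data, indent=2)}\n</script>'


print(article_jsonld(
    "Optimizing Content for LLMs: The Definitive How-To Guide",
    "Jane Doe",  # hypothetical author
    {
        "Search engine optimization": "https://en.wikipedia.org/wiki/Search_engine_optimization",
        "Large language model": "https://en.wikipedia.org/wiki/Large_language_model",
    },
))
```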

Implementation

Goal: Build with Processing Fluency and Information Gain

At this stage, focus on writing clearly and using technical formatting for AI search. Aim to create content that people like to read and that AI can process without trouble.

LLMs work best with clear, predictable language and standard grammar. If your writing is complicated or full of fluff, the model gets confused and is less confident in its answers.
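There is no standard metric for how "fluent" a passage is to an LLM. Sentence length, however, is a rough and widely used proxy for readability. This hypothetical Python helper flags sentences longer than a chosen word limit. The 25-word default is an assumption, not a published threshold.

```python
import re


def long_sentences(text: str, max_words: int = 25) -> list[tuple[int, str]]:
    """Hypothetical helper: (word count, sentence) for each sentence over max_words."""
    # Split after ., ! or ? followed by whitespace (a simple heuristic).
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for s in sentences:
        n = len(s.split())
        if n > max_words:
            flagged.append((n, s))
    return flagged


draft = "GEO gets content cited. SEO gets pages ranked."
print(long_sentences(draft))
```

Flagged sentences are candidates for splitting into shorter declarative statements.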

Implementation

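Some RAG systems use re-rankers that favor passages offering information the model does not already have from its training data, such as original data, proprietary research, or expert quotes. Providing this kind of information gain gives you a real edge over the competition.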
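Production re-rankers are learned models, and their exact signals are not public. As a purely illustrative proxy for information gain, the sketch below measures what share of a passage's distinct terms appear on none of a set of competing pages. The `novelty` function is hypothetical and is not how any real re-ranker works. The sample texts are invented.

```python
import re


def terms(text: str) -> set[str]:
    """Distinct lowercase word/number tokens."""
    return set(re.findall(r"[a-z0-9%]+", text.lower()))


def novelty(passage: str, competitor_pages: list[str]) -> float:
    """Hypothetical proxy: fraction of the passage's terms absent from every competitor page."""
    own = terms(passage)
    if not own:
        return 0.0
    seen = set().union(*(terms(p) for p in competitor_pages))
    return len(own - seen) / len(own)


# Invented sample texts for illustration.
competitors = ["CRM tools help small firms manage clients.", "A CRM helps firms track clients and deals."]
generic = "CRM tools help small firms manage clients."
original = "Our internal survey data shows small law firms miss conflict checks most often."

print(novelty(generic, competitors), round(novelty(original, competitors), 2))
```

The generic sentence scores zero because every term already appears elsewhere. The passage built on first-party data scores high.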

Implementation

While much of this work is manual, several AI SEO tools and technologies make optimizing for LLMs easier. Even if you handle most tasks by hand, you can use tools to speed up your workflow and check your results.

An e-commerce company ranked well on Google for its target keywords but was almost invisible in AI-generated recommendations.

When prospects asked tools like ChatGPT or Perplexity for the best CRM for small businesses, the company's products were not mentioned, while its five main competitors were, even though this company offered quality products at competitive prices.

Despite strong Google rankings, the company’s content was written only for human readers and Google’s algorithms. They had no clue how to rank on ChatGPT.

As a result, LLMs could not retrieve clear information about the products. Product headings were not clearly defined, their features were hidden in long sentences, and unique data that set their products apart was missing.

We completed this project incrementally, in multiple phases:

Semantic HTML

Schema and Structured Data

Content Engineering

Drawing from Ahrefs' research, which shows that AI Overview updates nearly 46% of the time and can be tracked at scale, we gained valuable insight into which content strategies drive consistent citations and visibility.

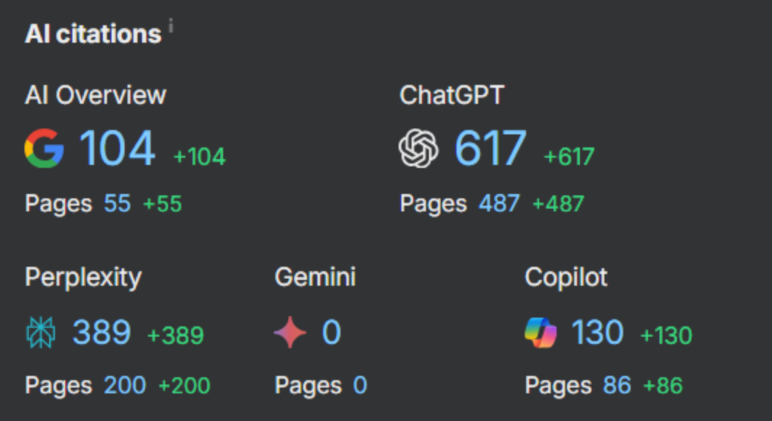

Using the same Ahrefs methodology, our team tracked citations across Google AI Overview, ChatGPT, Perplexity, Gemini, and Copilot to show how our four-phase optimization program delivers results. In the last four months, our optimization project has led to noticeable gains in AI citation metrics across all major LLM platforms.
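The core metric in this kind of tracking is simple: for a fixed set of prompts, how often does each platform cite your domain? The minimal Python sketch below assumes you have already logged one record per (platform, prompt) check. The record format and the `citation_rates` helper are hypothetical, and the sketch is not the Ahrefs methodology itself.

```python
from collections import defaultdict


def citation_rates(records: list[tuple[str, str, bool]]) -> dict[str, float]:
    """Hypothetical helper: share of tracked prompts, per platform, whose answer cited your domain."""
    totals: dict[str, int] = defaultdict(int)
    cited: dict[str, int] = defaultdict(int)
    for platform, _prompt, was_cited in records:
        totals[platform] += 1
        cited[platform] += int(was_cited)
    return {p: cited[p] / totals[p] for p in totals}


# Hypothetical log entries: (platform, prompt, did the answer cite us?)
records = [
    ("Perplexity", "best CRM for small law firms", True),
    ("Perplexity", "GEO vs SEO", False),
    ("ChatGPT", "best CRM for small law firms", True),
]
print(citation_rates(records))
```

Running the same prompt set before and after each optimization phase turns these rates into a before/after comparison per platform.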

Business Impact: More AI citations led to more qualified leads. Prospects who found the company through AI recommendations converted 34% more often than those from traditional search, since the AI had already pre-qualified them.

Key Takeaway: Optimizing for LLMs does more than increase visibility. It allows reputable AI systems to endorse your products through their review process.

This guide covered the essentials of optimizing your content for large language models. Use this framework to help AI systems find and cite your work. The digital landscape is shifting, and sites that do not adapt risk losing traffic in the coming years. If you want your content to stand out in AI Search, reach out to us.

Book a free strategy call with our GEO expert team. We will audit your current content and show you what it takes to become a leading authority in AI Search.